Using Microsoft Technologies for the FIRST 2007 robotics competition

I have been meaning to blog about one of the coolest projects I have been involved with for a while now, but I have been too busy to do so. Back in June 2006, I joined Bob Pitzer (Botbash), Chris Harriman and Joel Meine in providing a software/hardware solution for the FIRST Robotics Competition for their upcoming 2007 season. FIRST is a nonprofit organization dedicated to teaching young minds about science and technology through several fun-filled robotics competitions. Make sure you check out their web site to see how you might be able to get involved in this great program. Also check out the 2007 video archive to see how exciting these events can be. Pay attention to the computer graphics superimposed over the live video, because that is what we built!

The software and hardware we built was used to manage multiple 3-day events over roughly a month: around 40 events across the US in about 30 days, each with anywhere from 30 to 70 competing teams. Each day of an event had to run efficiently in order to complete the tournament-style competition. At a high level, the system was designed to do the following:

- Lead an event coordinator through the steps of managing a tournament

- Maintain a schedule of matches over a 3-day period

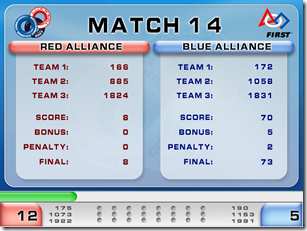

- Inform the audience of match scoring in real time

- Broadcast live video mixed with real-time match score information to the web

- Inform the other competitors in the pit area of upcoming matches, match results, and real-time team ranking details as each match completes.

- Control the field of play from a central location

- Gather scoring details from judges located on the field

- Provide periodic event reports for online viewing as the tournament progresses

All of this was done using Microsoft .NET along with various open source .NET projects, which sped up development and provided a robust system that was easy for the volunteers to use.

I used several pre-built components to build this system. Since the application needed to interface with multiple hardware components and provide a rich user interface, a Windows Forms application was required. I chose the Patterns and Practices Smart Client Software Factory (SCSF) as the basis for the Windows Forms framework. I had used this framework on a previous project, and I knew it would give me the modular design I needed to meet the functional goals. I wanted a solution that let me do a lot of unit testing, because I was going to be the only developer on the project and there would be very little QA testing. Since SCSF uses the Model-View-Presenter (MVP) pattern, I knew testability would not be an issue. SCSF also uses dependency injection, which lends itself very well to unit testing. Another benefit of dependency injection was that I could mock out the hardware interfaces, so I did not need a fully functional robot arena in my home office! In fact, I developed the hardware interface layer without ever connecting my development machine to the hardware. I did this by defining a good interface and using a mock implementation of it to complete all the business logic. Later we implemented the real hardware layer, and to this day I use the mock implementation for all development work, since I do not have an arena in my office.
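
The real system was written in C# on SCSF, and its dependency injection container is not shown here. The minimal Python sketch below only illustrates the idea described above, assuming a simple match start/stop interface: the presenter receives its hardware dependency through its constructor, so a mock can stand in for the arena during development and unit tests. `FieldHardware`, `MockFieldHardware` and `MatchPresenter` are hypothetical names, not the original code.

```python
from abc import ABC, abstractmethod


class FieldHardware(ABC):
    """Hypothetical hardware interface; the real interface is not described in the post."""

    @abstractmethod
    def start_match(self) -> None: ...

    @abstractmethod
    def stop_match(self) -> None: ...

    @abstractmethod
    def is_match_running(self) -> bool: ...


class MockFieldHardware(FieldHardware):
    """In-memory stand-in used for development and unit tests (no arena required)."""

    def __init__(self) -> None:
        self.running = False
        self.calls: list[str] = []  # records every hardware command for test assertions

    def start_match(self) -> None:
        self.calls.append("start")
        self.running = True

    def stop_match(self) -> None:
        self.calls.append("stop")
        self.running = False

    def is_match_running(self) -> bool:
        return self.running


class MatchPresenter:
    """Business logic that depends only on the interface, injected via the constructor."""

    def __init__(self, hardware: FieldHardware) -> None:
        self._hardware = hardware

    def on_start_clicked(self) -> str:
        if self._hardware.is_match_running():
            return "Match already running"
        self._hardware.start_match()
        return "Match started"

    def on_stop_clicked(self) -> str:
        if not self._hardware.is_match_running():
            return "No match to stop"
        self._hardware.stop_match()
        return "Match stopped"
```

In production, the same presenter would be constructed with a real hardware implementation instead of the mock; nothing else in the business logic has to change.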

For the data access layer I chose SubSonic, because it could quickly generate the data access layer from a database schema. This let me grow the data model rapidly as the solution emerged over time. The database back end was SQL Server Express 2005. Since the solution only needed to support a small number of clients and had to run disconnected from the Internet, SQL Server Express was right for the job.

The application was deployed with ClickOnce in a full-trust environment. This let me make changes to the application throughout the tournament while keeping the software on each playing field computer at the most recent version. The ClickOnce deployment also managed the upgrade process for any database changes.
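
The post does not say how the database upgrade was carried out. One common approach, sketched here under that assumption, is to keep a schema version table and apply any newer migration scripts when the freshly deployed application starts. Python's built-in `sqlite3` stands in for SQL Server Express, and the tables and migrations are hypothetical.

```python
import sqlite3

# Hypothetical migrations: key N holds the SQL that moves the schema from version N-1 to N.
MIGRATIONS = {
    1: "CREATE TABLE team (number INTEGER PRIMARY KEY, name TEXT NOT NULL)",
    2: "CREATE TABLE match_result (id INTEGER PRIMARY KEY, red_score INTEGER, blue_score INTEGER)",
    3: "ALTER TABLE team ADD COLUMN ranking INTEGER",
}


def current_version(conn: sqlite3.Connection) -> int:
    """Return the highest applied schema version, or 0 for a brand-new database."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)")
    (version,) = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    return version or 0


def upgrade(conn: sqlite3.Connection) -> int:
    """Apply every migration newer than the stored version, in order; return the final version."""
    version = current_version(conn)
    for target in sorted(v for v in MIGRATIONS if v > version):
        with conn:  # commits pending changes when the block exits without an error
            conn.execute(MIGRATIONS[target])
            conn.execute("INSERT INTO schema_version (version) VALUES (?)", (target,))
        version = target
    return version
```

Calling `upgrade(sqlite3.connect(":memory:"))` on a fresh database applies all three migrations; calling it again on an up-to-date database applies nothing, which is what makes it safe to run on every application start.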

Central control of the field of play was handled by a single .NET Windows Forms application that interfaced with Programmable Logic Controllers (PLCs) via a third-party managed library. This library let me set and monitor PLC memory locations without having to deal with the underlying TCP/IP communications protocol. This was a great time saver: I could concentrate on high-level business value rather than low-level communications, and because I did not have to write the low-level communications myself, I spent little time debugging hardware/software integration problems.
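
The third-party PLC library is not named in the post, so the `PlcClient` protocol below is a hypothetical stand-in with just `read` and `write`. The sketch shows one way to monitor memory locations: poll each address and report only the values that changed since the previous poll, so the application reacts to events rather than to raw readings. `FakePlc` and the address names are illustrative only.

```python
from typing import Dict, Iterable, List, Protocol, Tuple


class PlcClient(Protocol):
    """Hypothetical stand-in for the third-party managed PLC library."""

    def read(self, address: str) -> int: ...

    def write(self, address: str, value: int) -> None: ...


class FakePlc:
    """In-memory PLC used for illustration; unknown addresses read as 0."""

    def __init__(self) -> None:
        self.memory: Dict[str, int] = {}

    def read(self, address: str) -> int:
        return self.memory.get(address, 0)

    def write(self, address: str, value: int) -> None:
        self.memory[address] = value


class PlcMonitor:
    """Polls a set of PLC addresses and reports only the values that changed."""

    def __init__(self, client: PlcClient, addresses: Iterable[str]) -> None:
        self._client = client
        self._addresses = list(addresses)
        self._last: Dict[str, int] = {}  # last value seen at each address

    def poll(self) -> List[Tuple[str, int]]:
        changes = []
        for address in self._addresses:
            value = self._client.read(address)
            if self._last.get(address) != value:  # first poll reports every address
                self._last[address] = value
                changes.append((address, value))
        return changes
```

The first `poll()` reports the initial value of every watched address; after that, writing `1` to a hypothetical `"red_estop"` address would make the next `poll()` return `[("red_estop", 1)]` and nothing else.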

Another key area of integration was a hardware UI, consisting of LCDs and buttons, that let a field operator manage the match process without using the computer's keyboard or mouse. This was done using a serial port communicating with a BX24 microcontroller from NetMedia. The operator pressed buttons on the hardware UI to start or stop the match, as well as to perform many other tournament-related functions. The hardware UI guided the operator to the next step by flashing the most appropriate button for the current point in the match, which made the operator's job a lot easier.
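
The post does not describe the messages exchanged between the PC and the BX24, so the `FLASH <index>` text command below is invented for illustration. The sketch shows the idea of flashing "the most appropriate button": map each point in the match to the button the operator should press next, then turn that into a serial command. The states and button names are hypothetical.

```python
from enum import Enum


class MatchState(Enum):
    """Hypothetical points in the match flow."""
    IDLE = "idle"
    TEAMS_LOADED = "teams_loaded"
    RUNNING = "running"
    COMPLETE = "complete"
    SCORES_COMMITTED = "scores_committed"


# Physical button order on the (hypothetical) hardware panel.
BUTTONS = ["LOAD", "START", "STOP", "COMMIT", "NEXT"]

# The button the operator should press next from each state.
NEXT_BUTTON = {
    MatchState.IDLE: "LOAD",
    MatchState.TEAMS_LOADED: "START",
    MatchState.RUNNING: "STOP",
    MatchState.COMPLETE: "COMMIT",
    MatchState.SCORES_COMMITTED: "NEXT",
}


def button_command(state: MatchState) -> bytes:
    """Build an ASCII line telling the controller which button LED to flash."""
    index = BUTTONS.index(NEXT_BUTTON[state])
    return f"FLASH {index}\r\n".encode("ascii")
```

Whenever the match state changes, the resulting bytes could be written to the serial port with a library such as pySerial (`serial.Serial(...).write(...)`); keeping the state-to-button mapping in one table makes it easy to unit test without the panel attached.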

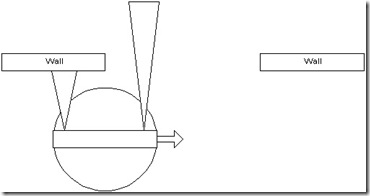

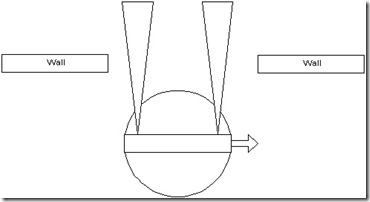

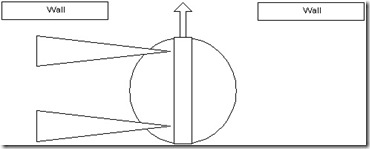

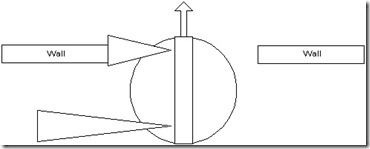

The audience needed to be informed about what was going on during the event. An announcer was always present, but the audience also needed visual cues that made the state of play apparent. So I created a Windows Forms audience display application that provided the detail the audience needed. This detail was displayed not only to the live audience but also broadcast over the Internet to people who were not able to attend in person. The audience display showed live video and match statistics mixed together on one screen (you can see this in action in the video links I mentioned above), and it was projected onto a huge screen so every audience member could easily see it. The live video mixing used the same green screen technique often used with the weatherman on local news stations: a green key color is used in a color keying process, so the live video shows through wherever that green appears in the display. The screen snapshots in this post give you an idea of the type of information that was presented to the audience and the web broadcast.
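
The post does not say whether the keying was done in software or by video mixing hardware. As a software illustration only, the sketch below applies the basic color-key rule to two equally sized frames of RGB pixels: where the graphics frame is "key green", the live video pixel shows through; elsewhere the graphics pixel is kept. The green-dominance test and the default threshold of 80 are assumptions; production keyers use more robust color-distance measures and soft edges.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Frame = List[List[Pixel]]     # rows of pixels


def is_key_green(pixel: Pixel, threshold: int = 80) -> bool:
    """A pixel counts as key color when green exceeds both red and blue by at least `threshold`."""
    r, g, b = pixel
    return g - max(r, b) >= threshold


def chroma_key(graphics: Frame, video: Frame, threshold: int = 80) -> Frame:
    """Return a frame showing `video` wherever `graphics` is key green, and `graphics` elsewhere."""
    if len(graphics) != len(video) or any(len(a) != len(b) for a, b in zip(graphics, video)):
        raise ValueError("frames must have the same dimensions")
    return [
        [vid_px if is_key_green(gfx_px, threshold) else gfx_px
         for gfx_px, vid_px in zip(gfx_row, vid_row)]
        for gfx_row, vid_row in zip(graphics, video)
    ]
```

With this rule, the score graphics can be drawn in any color except strong green, and any region painted pure green becomes a "window" onto the live camera feed.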

Well, I could go on and on about the details of the application we wrote to make the 2007 FIRST FRC events a great success, but I think I will save them for a set of later posts. I have also been working on the software for the 2008 season, which uses WPF for an even richer user experience (can anyone guess that I used some animations?).